Recently I worked on a full vSphere migration (both hardware and software) for one of our customers. We ran into an issue getting Link Layer Discovery Protocol (LLDP) to work on the new PowerEdge R730 ESXi hosts. Let’s start with some concepts, and then I will share my field experience so that you know exactly what the situation was and how to tackle it if you face it.

Why use LLDP?

Similar to Cisco Discovery Protocol (CDP), Link Layer Discovery Protocol (LLDP) is a layer 2 neighbor discovery protocol that allows devices to advertise device information to their directly connected peers/neighbors. The big difference between the two is that LLDP is vendor-neutral while CDP is a Cisco proprietary protocol.

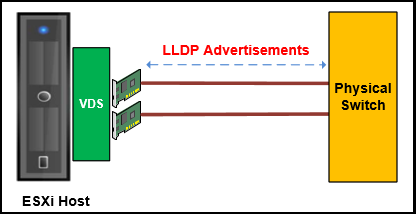

Another difference is that CDP is supported on both vSphere Standard Switches (VSS) and vSphere Distributed Switches (VDS), while LLDP is only supported on VDS. As with most things in life, the more expensive distributed switch has the better bells and whistles. VDS is included in vSphere Enterprise Plus licensing, and it also comes with any Standard license for either VSAN or NSX.

Using LLDP, device information such as chassis identification, port ID, port description, system name and description, device capability (as router, switch, hub…), IP/MAC address, etc., is transmitted to the neighboring devices. This information is also stored in local Management Information Bases (MIBs) and can be queried with the Simple Network Management Protocol (SNMP). Each LLDP-enabled device runs an LLDP agent, which sends out advertisements from all physical interfaces either periodically or as changes occur.
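To make the advertised information more concrete, here is a minimal Python sketch of how the body of an LLDP frame is laid out. It assumes the TLV (type-length-value) encoding defined in IEEE 802.1AB, where each TLV starts with a 16-bit header holding a 7-bit type and a 9-bit length. The helper names (`make_tlv`, `parse_tlvs`) and the sample values (MAC address, port name, system name) are made up for illustration and do not come from our environment.

```python
import struct

# TLV type codes from IEEE 802.1AB (the basic management set).
TLV_NAMES = {
    0: "End of LLDPDU",
    1: "Chassis ID",
    2: "Port ID",
    3: "Time To Live",
    4: "Port Description",
    5: "System Name",
    6: "System Description",
    7: "System Capabilities",
    8: "Management Address",
}


def make_tlv(tlv_type: int, value: bytes) -> bytes:
    """Encode one TLV: 7-bit type and 9-bit length packed into 2 bytes."""
    return struct.pack("!H", (tlv_type << 9) | len(value)) + value


def parse_tlvs(payload: bytes) -> list:
    """Split an LLDPDU payload into (type, value) pairs, stopping at End."""
    tlvs = []
    offset = 0
    while offset + 2 <= len(payload):
        (header,) = struct.unpack_from("!H", payload, offset)
        tlv_type = header >> 9      # upper 7 bits
        length = header & 0x1FF     # lower 9 bits
        offset += 2
        if tlv_type == 0:           # End of LLDPDU
            break
        tlvs.append((tlv_type, payload[offset:offset + length]))
        offset += length
    return tlvs


# Hypothetical advertisement from a physical switch port.
sample = (
    make_tlv(1, b"\x04" + bytes.fromhex("001122334455"))  # subtype 4: MAC address
    + make_tlv(2, b"\x05" + b"Te1/0/1")                   # subtype 5: interface name
    + make_tlv(3, struct.pack("!H", 120))                 # TTL in seconds
    + make_tlv(5, b"core-sw-01")                          # system name
    + make_tlv(0, b"")                                    # end marker
)

parsed = parse_tlvs(sample)
for tlv_type, value in parsed:
    print(TLV_NAMES.get(tlv_type, "Unknown"), value)
```

The chassis ID, port ID, and system name fields in this sketch correspond to the properties that the vSphere Web Client displays for the neighboring switch.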

With Link Layer Discovery Protocol (LLDP), vSphere administrators can determine which physical switch port connects to a given vSphere distributed switch. When LLDP is enabled for a particular distributed switch, you can view properties of the physical switch (such as chassis ID, system name and description, and device capabilities) from the vSphere Web Client.

How to enable LLDP on vSphere VDS switch?

LLDP should be enabled on both the physical switches and the vSphere VDS switches so that neighbors can exchange the advertised information.

To enable LLDP on a VDS switch:

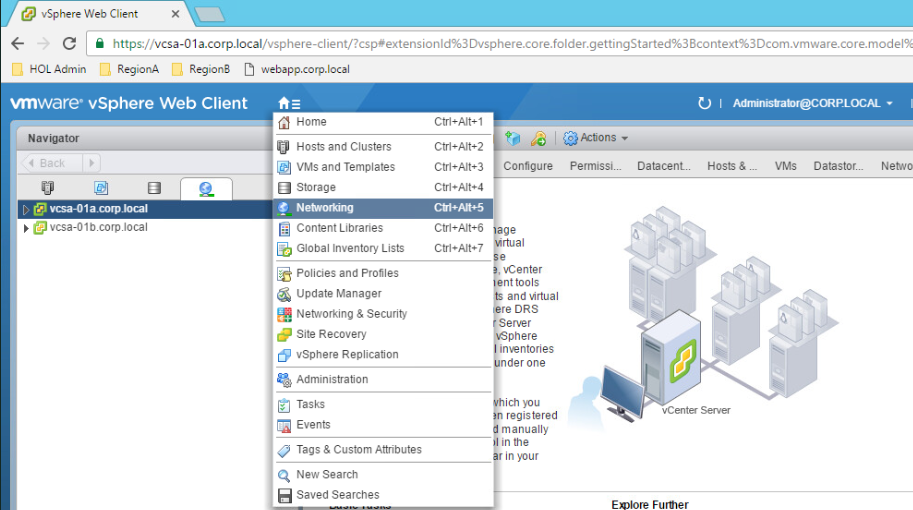

Log in to the vSphere Web Client and navigate to the Networking section.

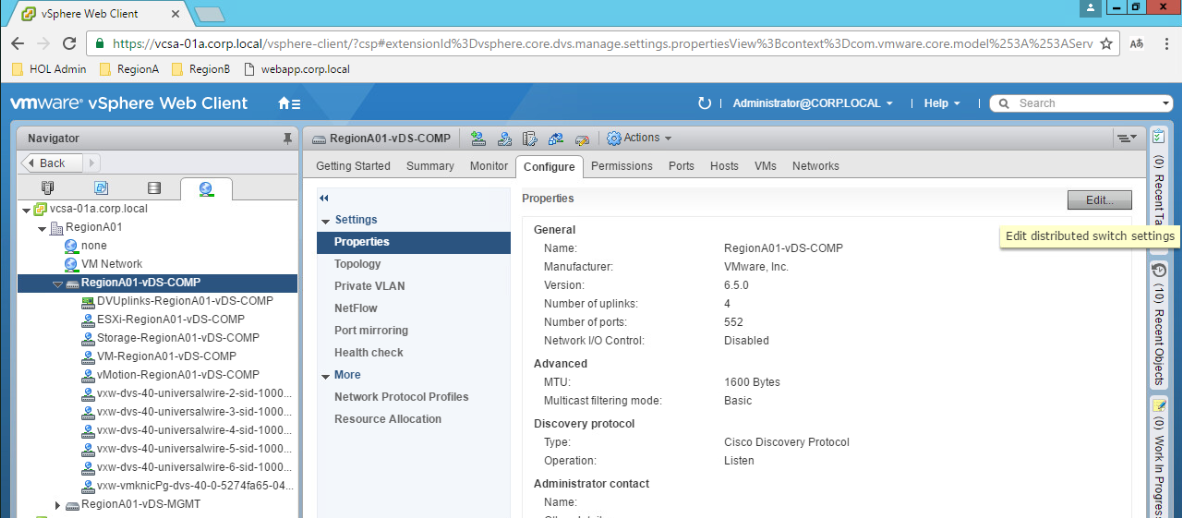

Choose your VDS switch, navigate to the Configure tab, and select Properties under Settings in the left pane.

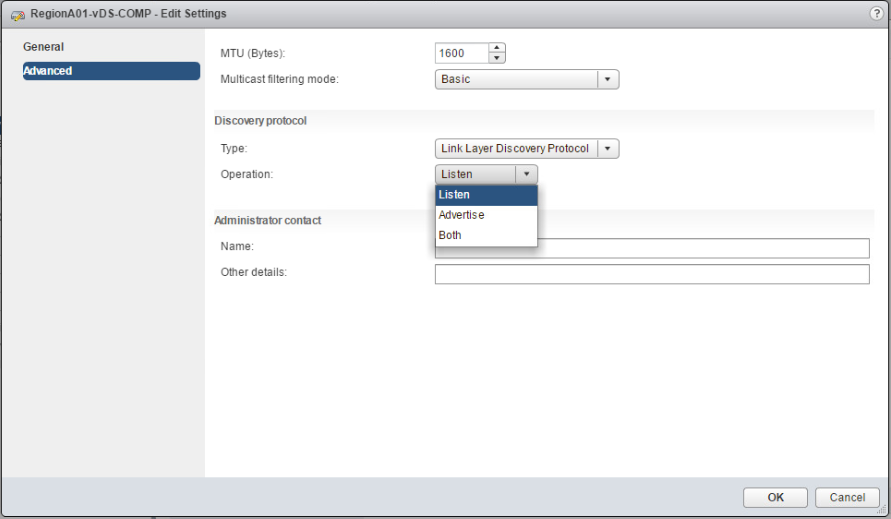

Click Edit. Click on Advanced and choose LLDP as your discovery protocol.

Select the LLDP mode from the Operation drop-down menu.

- Listen: ESXi detects and displays information about the associated physical switch port, but information about the vSphere distributed switch is not available to the switch administrator.

- Advertise: ESXi makes information about the vSphere distributed switch available to the switch administrator, but does not detect and display information about the physical switch.

- Both: ESXi detects and displays information about the associated physical switch and makes information about the vSphere distributed switch available to the switch administrator.
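The three operation modes boil down to which side gets to see the other. As a rough illustration, the Python sketch below models that; the enum and function names are hypothetical and are not part of any vSphere API.

```python
from enum import Enum


class LldpOperation(Enum):
    """The three choices in the Operation drop-down menu."""
    LISTEN = "Listen"
    ADVERTISE = "Advertise"
    BOTH = "Both"


def esxi_sees_physical_switch(mode: LldpOperation) -> bool:
    """True when ESXi detects and displays physical switch port details."""
    return mode in (LldpOperation.LISTEN, LldpOperation.BOTH)


def switch_admin_sees_vds(mode: LldpOperation) -> bool:
    """True when the distributed switch advertises itself to the switch."""
    return mode in (LldpOperation.ADVERTISE, LldpOperation.BOTH)


for mode in LldpOperation:
    print(
        f"{mode.value:<10}"
        f" ESXi sees switch: {esxi_sees_physical_switch(mode)!s:<6}"
        f" switch admin sees VDS: {switch_admin_sees_vds(mode)}"
    )
```

If you want visibility in both directions, Both is the mode to pick.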

Our Issue

So now you know what LLDP is and how to enable it on the vSphere VDS side. Let’s delve into our case, starting with what we had and the issue we faced.

Our environment was as follows:

- DELL PowerEdge R730 Servers.

- vSphere ESXi 6.5 U1 is running on these hosts.

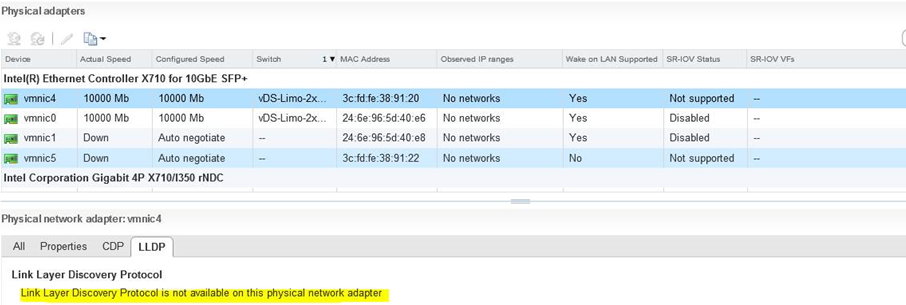

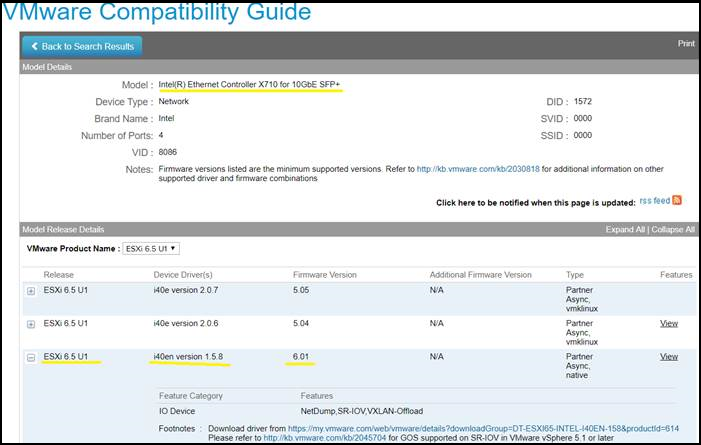

- Dual-port Intel Ethernet Controller X710 for 10GbE SFP+ cards.

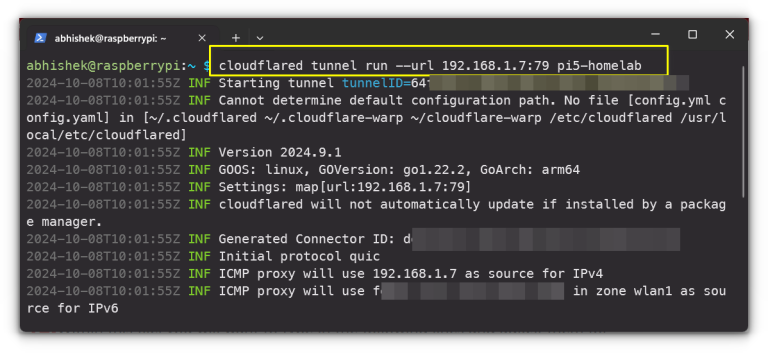

LLDP was already enabled on the physical network side, so we enabled LLDP on the VDS switch with the 10GB uplinks. However, no LLDP frames were detected in either direction (in/out) on the ESXi side. In addition, the following message appeared on our physical uplinks:

Link Layer Discovery Protocol is not available on this physical network adapter.

Investigation

We noticed the following:

- Interestingly, if we enable CDP, it works without any issue.

- LLDP works fine on the other 1GB ports.

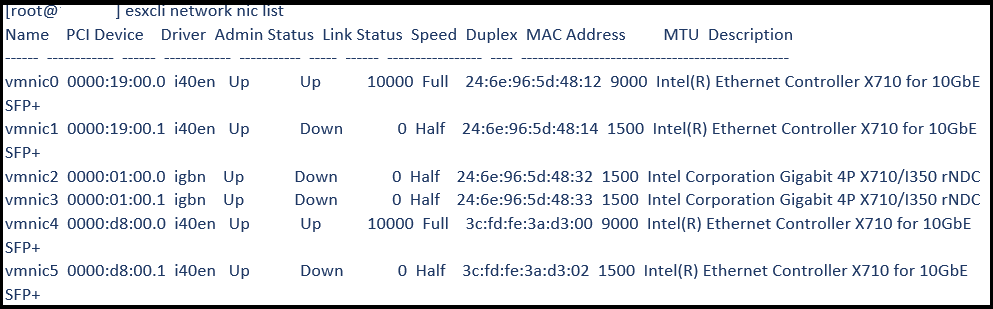

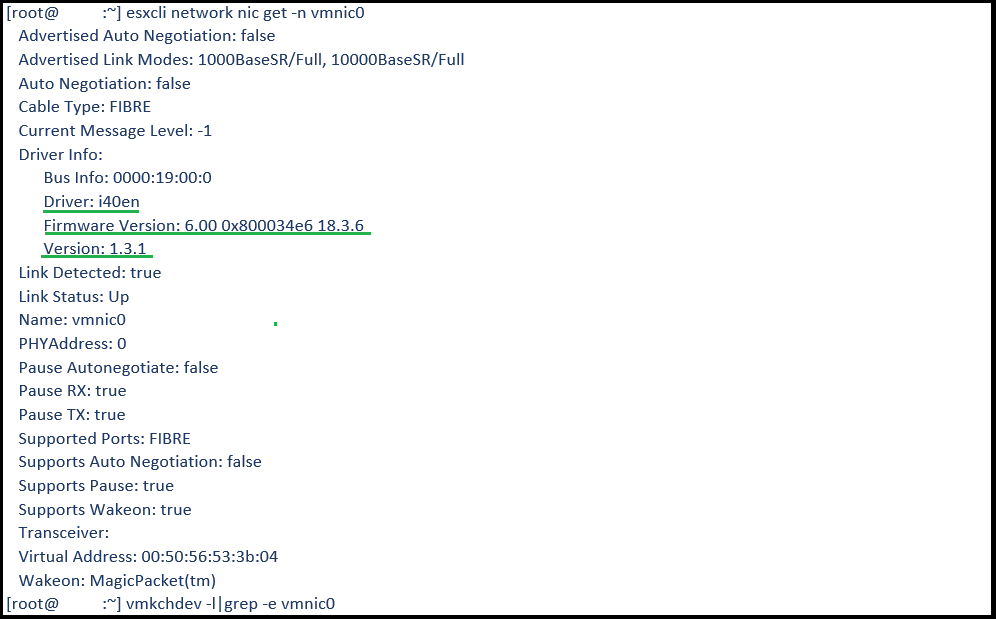

Checking the driver and firmware in use on the 10GB ports showed i40en driver version 1.3.1 and firmware version 6.0.

Solution

In my experience, with such odd issues the first thing to check is the driver/firmware of the adapter, and that is where the solution came from.

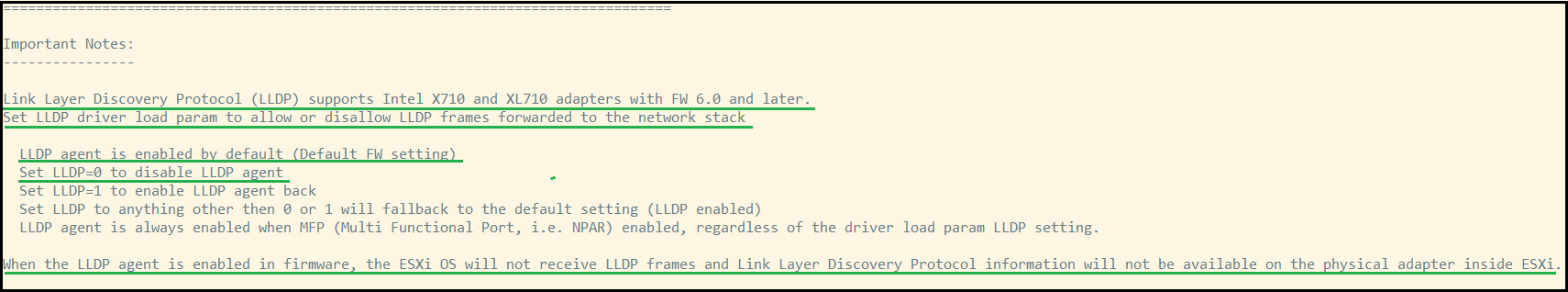

The release notes of the latest i40en driver, version 1.5.8, explain the issue:

https://my.vmware.com/group/vmware/details?downloadGroup=DT-ESXI65-INTEL-I40EN-158&productId=614

The LACP configuration guide for Intel X700 series converged adapters states the following:

https://www.intel.com/content/dam/www/public/us/en/documents/brief/lacp-config-guide.pdf?asset=16376

Each Intel® Ethernet 700 Series Network Adapter has a built-in hardware LLDP engine, which is enabled by default. The LLDP Engine is responsible for receiving and consuming LLDP frames, and also replies to the LLDP frames that it receives. The LLDP engine does not forward LLDP frames to the network stack of the Operating System.

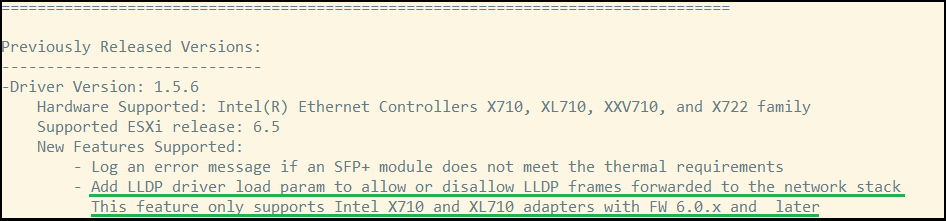

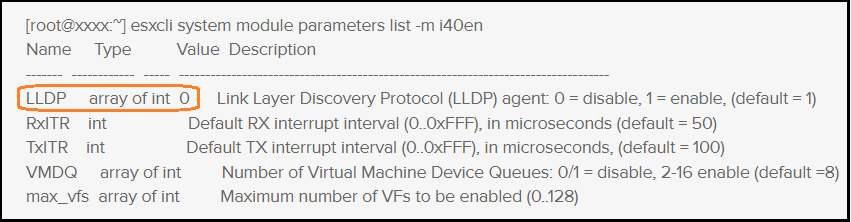

So we need to disable the LLDP agent in the adapter firmware; otherwise ESXi will not receive LLDP frames, and Link Layer Discovery Protocol information will not be available on the physical adapter inside ESXi. To do so, we need to update the driver from the current 1.3.1 to at least version 1.5.6.
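Checking whether a host already meets that minimum is a simple version comparison. A minimal Python sketch follows; it assumes the driver version is a plain dotted string such as 1.3.1 (real version strings may carry build suffixes that would need stripping first), and the function names are hypothetical.

```python
def parse_version(text: str) -> tuple:
    """Turn '1.5.6' into (1, 5, 6) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Minimum i40en driver version named above.
MIN_I40EN_VERSION = parse_version("1.5.6")


def meets_minimum(installed: str) -> bool:
    """True when the installed driver is at least the minimum version."""
    return parse_version(installed) >= MIN_I40EN_VERSION


for version in ("1.3.1", "1.5.6", "1.5.8"):
    print(version, "ok" if meets_minimum(version) else "needs update")
```

Comparing tuples of integers rather than raw strings avoids the trap where a version like 1.10.0 would sort before 1.5.6 alphabetically.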

The VMware Compatibility Guide shows that the latest driver, 1.5.8, is compatible with vSphere 6.5 U1:

https://www.vmware.com/resources/compatibility/detail.php?deviceCategory=io&productid=37976&deviceCategory=io&details=1&partner=46&releases=367&keyword=x710&page=1&display_interval=10&sortColumn=Partner&sortOrder=Asc

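We took the following actions to solve the LLDP issue:

- Updated the i40en driver from 1.3.1 to a release that supports the setting (1.5.6 or later).

- Set the driver's LLDP parameter to 0 for each port (LLDP=0,0 on our dual-port adapters).

This disables the internal LLDP agent on the network card so that it passes LLDP frames to ESXi.

After that, LLDP worked as expected and the ESXi hosts were able to send and receive LLDP frames.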

Note: Because this variable is an array of integers, we used LLDP=0,0 for our dual-port adapters. If you have a quad-port adapter, you should use LLDP=0,0,0,0.
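To illustrate the note, the Python sketch below builds the parameter value for a given number of ports and wraps it in a command line. It assumes the value is applied as an i40en module parameter through `esxcli system module parameters set`; verify the exact syntax against the driver release notes for your version. The function names are hypothetical, and module parameter changes normally take effect only after a host reboot.

```python
import shlex


def lldp_disable_param(port_count: int) -> str:
    """Build the LLDP value with one 0 per port, e.g. 'LLDP=0,0'."""
    if port_count < 1:
        raise ValueError("port_count must be at least 1")
    return "LLDP=" + ",".join(["0"] * port_count)


def esxcli_command(port_count: int) -> str:
    """Assumed command form for setting the i40en module parameter."""
    args = [
        "esxcli", "system", "module", "parameters", "set",
        "-m", "i40en",
        "-p", lldp_disable_param(port_count),
    ]
    return " ".join(shlex.quote(arg) for arg in args)


print(esxcli_command(2))  # dual-port adapter
print(esxcli_command(4))  # quad-port adapter
```

Generating the string from the port count keeps the number of zeros in step with the adapter, which is the easy part to get wrong when the same setting is rolled out to hosts with different cards.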

Thanks for reading,

Share this post if you find it informative.

Mohamad Alhussein
